For the past month, I worked on a Driven Data Competition - Predicting Poverty alongside Shadie Khubba (Yale Statistics MA '17). This post is a detailing of our results (top 10% finish ~ 200 place of 2200 contestants), code, and learnings.

The code for our best models can be found on our repo.

Motivation

Shadie and I had met in a Statistical Case Studies class that involved weekly hack sessions of solving an amorphous problem (e.g. predict New Haven real estate prices from online data). We figured this Driven Data Competition was our attempt to prove our chops in the real world and get more familiar with the Python data stack as opposed to academia's R. Plus the bragging rights and possible $ if we won.

The Problem

According to the competition website, predicting poverty from survey data is a hard problem. We were given survey response data from 3 anonymized countries (A, B & C), at both the household and individual level. Predictions were done at the household level, with each household having a 1:n relationship with individuals.

Scoring was done with a mean log loss of the three, with our predictions being offered as probabilities between 0-1 for each household being classified as poor. A baseline naive score with uniform 0.5 probability would score 0.69.

Early Learnings

We spent our first two weeks using a traditional approach; conducting exploratory data analysis and throwing some standard classifiers at the data. EDA did not prove particularly useful since each column had its data scrambled and normalized. However, two challenging issues emerged from looking at the dataset. First, the classes were imbalanced in countries B and C; the ratio of poor households to non-poor was 1:12. Second, the majority( >90%) of predictors were categorical; with several having large sets of up to 80 possible values.

We initially began throwing traditional methods at the problem: SVM, logistic regression, boosting and building off the random forest model benchmark (scored at .55).

Organizationally, we started with a GitHub repo that quickly turned into a mess. While we did organize our work with all data files/submission files saved, it quickly grew apparent that restructuring our code was inefficient. Shadie and I were working in our separate .py files, and often rewriting or re-running models locally ourselves. We didn't spend enough time planning a project structure early on; later I found out about the cookie-cutter data science project, but it was too late to implement a new project structure.

Inherent Challenges

We solved some minor problems with imbalanced classes (resampling) and missing values (imputation) but we really struggled with two major problems.

Categorical Variables

We needed a way to convert the categorical variables into numerical data such that scikit learn could actually use it as an input space. We started with one hot encoding (turning each possible value of a categorical predictor into a new 0/1 predictor), but realized it was blowing up dimensionality of the feature space, so much so that using only the continuous variables for prediction (a small fraction of the original feature space) yielded much better results (using the continuous variables and logistic regression brought us to a competitive score of 0.27, at a time when the scoreboard leaders were in the neighborhood of 0.15). Our 50 categorical variables were turning into 500 binary variables and the curse of dimensionality was taking effect: additional useless variables being added in and getting some assignment of weight. We spent time early on trying to find the right heuristics to reduce the dimensionality while not discarding potentially useful features.

Utilizing Individual Data

We began by using household data. Incorporating summary statistics (e.g. mean, range max, min) of predictions on individual data actually worsened prediction performance. Our hypothesis here is that individual predictions were offering a noisier version of what the household data already provided. Posts on the forum suggested that this was a common hurdle for the entire competitor field - many people were getting .17-.18 results by only using the household data.

CatBoost

We made a lucky breakthrough into the top 10% with a discovery of CatBoost. CatBoost is a GBM variant made by Russian search giant Yandex, and its killer feature is native support for categorical variables (hence the name categorical boosting = catboost). CatBoost is able to use statistical methods to selectively keep the most predictive values in each categorical column; saving much tedious cleaning on our end.

Our first submission here brought us in the top 8% of submissions, showing that boosting is the way to go. This dramatic improvement came solely from avoiding the curse of dimensionality and implementing a boosting algorithm (that is designed to require very little tuning)!

However, after incorporating Catboost we hit a plateau and were only able to eke out minor gains thereafter. We tried numerous feature engineering approaches for the individual data but were stumped. Our group ended up in the 200th place of over 2200 competitors though, so not a bad showing for our first time out.

Lessons Learned

We learned a lot of neat technical tricks and tools (cookiecutter, catboost, scikit learn goodies like pipeline), but the real learnings are far more generally applicable.

Try Boosting First

We spent a lot of time tossing models that didn't pan out before we arrived at the correct one. From the post-competition discussion boards, we realized that many kaggle-vets immediately tried industry standard models (XGBoost, LightBoost, CatBoost) as their first model as a matter of habit. Readings online support the idea that boosting is the go-to black box solution.

Feature Engineering is Everything

We couldn't get beyond our initial gain from using CatBoost by adding in individual data, though we tried many different approaches ranging from feature selection based off importance, predictions from individual data as features and more.

The big question was how to build features from the individual data. One of the top 3 finishers posted his feature set, and it turned out to be ridiculously simple: counting positive and negative values, the id of individuals (suggesting some ordering to household), sum of continuous values, and a count of unique categorical values.

Build a Testing Harness

A major challenge here was that we underestimated the limitations imposed by the two submissions per day. We were foolish and started off building models and then submitting to validate results. Only after many unfortunate submissions did we build out a suite of validation tools to rigorously test locally first. By the end of the first two weeks, we were running 3-fold cross validation, and eyeballing confusion matrices and predicted probability distributions. We started by treating the competition's submissions as a last validation step, not as a first one.

Next Time & Application

I'm optimistic that the next time we partake in a kaggle-style competition, we could place much higher if we had course corrected on these three things. Instead of scrambling to build out a testing harness with two weeks left, or furiously creating additional features in the final days, we could have comfortably spent 3 weeks feature engineering. The competition often felt like the xkcd comic below, but if we did it again, one would hope the pile of linear algebra would be less messy, and the results easier to check.

A final takeaway for both of us is that real data science life is not a kaggle-style competition. Online competitions push for a best solution; day-to-day work demands the "good enough" solution.

I'd imagine that the World Bank, which sponsored this competition, would have been equally happy to know that household data and an out of the box GBM produce a solution within 0.04 of the winning one. Putting together the $15,000 prize and paying DrivenData to run the competition shows they likely didn't know this from the get go. Clearly, some quick wins still to be found in social sector data challenges.

After taking a couple optimization classes at the School of Management and School of Statistics, I've been thinking about problems from an optimization lens. One such problem I spend too much time on is picking lineups for my grad rugby team. The night before a game, I confer with the other leaders of the squad to determine what the strongest line up will be. We discuss various groups, mixing players in different positions and the talk usually takes an hour.

The decision is based off player ability and practice attendance, and rooted in our qualitative feelings. I began thinking of how the problem could be cast quantitatively and if so, if I could build a decision making tool.

An hour of research (ie google) showed that this is actually a long solved problem in computer science: the assignment problem. Simply put, it is the task of minimizing the cost of assigning n workers to m jobs, where each worker i for every job j has a cost(i,j). Turns out it is an common industry application. For example, how can Uber minimize total customer wait time given a set of drivers and available jobs?

Many solution methods for the assignment problem are out there, but the simplest is the Hungarian Algorithm, and there is a one line SciPy implementation already. As always, amazed by how impressive the Python open source data stack is.

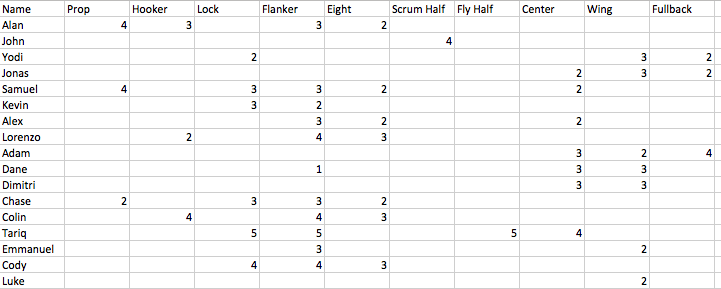

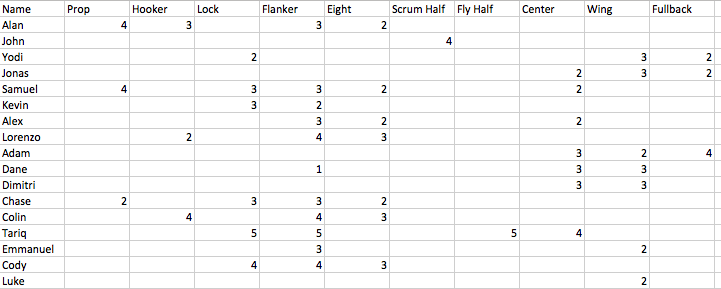

My rugby problem restated is thus maximizing the total team performance through assignment of n players to m=15 positions, where each player has a positional score for position. This knowledge is largely implicit in our captains' discussion, so not too much more work to put it into a csv file. This file is the performance matrix: each player can play a subset at of the positions at varying levels (0 - not at all, 3 - basic knowledge and practice, 5 - years of varsity level positional experience).

The sum of values for the selected players is the total team performance metric, and maximizing this is the objective function.

Code

I wrote an object that stores the players and positions and automates the initial selection as well as reselection for any potential injuries. It keeps track of the team's total performance score as well.

import numpy as np

from scipy.optimize import linear_sum_assignment

import pandas as pd

class Selections(object):

"""An object to optimally select a starting team given a performance csv."""

def __init__(self, data):

"""Read in data, csv needs to be to player-column and row-position. No need

for duplication"""

# fills all empty elements with 0

self.data = pd.read_csv(data, index_col=0).fillna(0)

self._duplicate_cols()

self.data = self.data.transpose()

self.orig_cost = self.data.values*-1

self.cost = self.orig_cost

# retrieve list of players and positions

self.positions = self.data.index.tolist()

self.players = self.data.columns.tolist()

self.starting_lineup = {}

self.starting_score = 0

self.current_lineup = {}

self.current_score = 0

def _duplicate_cols(self,

names=['Prop', 'Lock', 'Flanker', 'Center', 'Wing']):

"""Duplicate rows where there exist two spots on the field"""

for name in names:

second_position = name+'2'

self.data[second_position] = self.data[name]

# alphabetize columns

self.data = self.data.reindex_axis(sorted(self.data.columns), axis=1)

return

def _create_lineup(self, rows, cols):

"""Returns a dictionary of positions keys and player values"""

selections = {}

for row, col in zip(rows, cols):

position, player = self.positions[row], self.players[col]

selections[position] = player

return selections

def pick_lineup(self, starting=True):

"""Solves lineup selection with hungarian algorithm"""

if starting is True:

self.reset()

rows, cols = linear_sum_assignment(self.orig_cost)

self.starting_score = self._team_score(self.orig_cost, rows, cols)

self.starting_lineup = self._create_lineup(rows, cols)

return self.starting_lineup

else:

rows, cols = linear_sum_assignment(self.cost)

self.current_score = self._team_score(self.cost, rows, cols)

self.current_lineup = self._create_lineup(rows, cols)

return self.current_lineup

def substitute_selection(self, player_list):

"""Remove a given player and reruns the selection from remaining player

pool"""

for player in player_list:

player_index = self.players.index(player)

self.players.remove(player)

self.cost = np.delete(self.cost, player_index, 1)

current_lineup = self.pick_lineup(starting=False)

return current_lineup

def reset(self):

"""Reset the selection object to its original state"""

self.orig_cost = self.data.values*-1

self.cost = self.orig_cost

# retrieve list of players and positions

self.positions = self.data.index.tolist()

self.players = self.data.columns.tolist()

self.starting_lineup = {}

self.reserve_players = {}

def _team_score(self, cost, rows, cols):

"""Display the team total score"""

return cost[rows, cols].sum() * - 1

Example Use

Out[2]:

{'Center': 'Kyle S',

'Center2': 'Fish',

'Eight': 'Alex W',

'Flanker': 'Cody',

'Flanker2': 'Lorenzo',

'Fly Half': 'Tariq',

'Fullback': 'Adam',

'Hooker': 'Colin',

'Lock': 'Cam',

'Lock2': 'Kevin',

'Prop': 'Samuel',

'Prop2': 'Alan',

'Scrum Half': 'John',

'Wing': 'Jonas',

'Wing2': 'Yodi'}

Out[4]:

{'Center': 'Kyle S',

'Center2': 'Dane',

'Eight': 'Alex W',

'Flanker': 'Cody',

'Flanker2': 'Lorenzo',

'Fly Half': 'Fish',

'Fullback': 'Adam',

'Hooker': 'Colin',

'Lock': 'Cam',

'Lock2': 'Kevin',

'Prop': 'Chase',

'Prop2': 'Samuel',

'Scrum Half': 'Thomas',

'Wing': 'Jonas',

'Wing2': 'Yodi'}

Conclusion

"all models are wrong, but some are more wrong than others."

This model attempts to get at very basic challenge of selecting teams, and does so. However, it misses many more complicating factors such as picking teams based off opposition (ie selecting for speed thematically or interaction between two players who play particularly well together). Furthermore, if there are multiple optimal teams it only shows one.

Many of these issue are feasible to be coded in, but that would probably cost me more time to refactor and rewrite. Plus the healthy debate among captains and coach in selecting and thinking through lineups is half the fun. Should be even more fun now that we have a decision making tool now.

This personal site is built (and now rebuilt) with exclusively open source tools: pelican with a blue penguin theme, a github personal page, and jupyter notebooks. I'm a big fan of not reinventing the wheel, so I spent as much time picking the simplest and generally, most popular tools. I've found this combination maximizes my time sharing content, and minimizes the site maintanence.

Pelican

A python blogging package. At a high level, this package enables you to write content in markdown files (.rst or .md) and then converts them to html files. These html files are what's pushed online, and serve up static content. It's far simpler to use for a project like this than something designed for enterprise like Django (which I tried first). Best of all, it seems to have gotten more popular over time as evidenced by the number of stars and forks on Github.

There's tons of guides out there already, but I used these two in conjunction with the docs to get me started.

PBPython - An excellent beginner's guide but sacrificing some technical depth for the sake of readability.

Christine Doig's Guide -

Most thorough one I've found, but can be a little overwhelming until you familiarize yourself with the package's functionality.

Blue Penguin Theme

A beautiful minimalist theme designed by Jody Frankowski specifically for Pelican. It's barebones and actually removes much of pelican's features (ex: the social media bar) in favor of a very clean, modern look.

Github personal pages

In keeping with the idea to keep it as simple as possible, I choose to make use of Github's personal pages feature . Each account is given one for free, and has a file limit of 1 GB (I'm using about 2% of that). You just need to setup a repo, and you can push directly there. I've configured mine to also redirect to my custom url of alanzzhao.com instead of alanzzhao.github.io.

Even better, pelican has native support for this type of hosting along with Amazon S3 and Dropbox.

Jupyter Notebooks

Jupyter notebooks are the tool of choice for interactive computing with Python, and increasing for other langauges. They're driven by the powerful idea that data analysis is best served when it comes with insights and the ability for the consumer to experiment further. I wanted to use it as a format to easily share code, visuals, and analysis. Jupyter cuts out the cumbersome tasks of copying and pasting all of those components into a separate markdown file. For the curious, I'll also link the notebooks to be available on Github for reproducibility.

Some blogs write the entire site's content in Jupyter notebooks. Jake Vanderplas's site is an amazing example. From the site format, I strongly suspect he's running pelican as well.

Using Jupyter as a pelican content generation format is easy: it's plugin for pelican . Just install and activate.

Below are some code snippets showing how versatile this platform is (and to prove to myself this worked). Seaborn examples shamelessly taken from the library documentation. Visuals show how three species of flowers are differentiated by characteristics.